Ceci N'est Pas Une Cheerio

I was searching for a pre-trained model to draw bounding boxes around the elements of a cereal box, and I kept receiving the same frustrating answer. Even though many, if not all, of these models were specifically familiar with the concept of food, the response was often a very confident “poster” or “flyer” tag around the entire box.

While searching to see how others had worked around this issue, I started to notice a peculiar pattern in the behavior of neural nets.

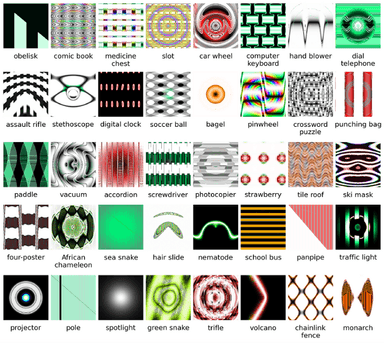

A curious example of a machine’s creative problem solving was an image-classifying deep neural network (DNN) that labeled nonsense images as real, recognizable objects with very high confidence.

The results read a lot more like Rorschach test responses than matter-of-fact classifications. Most people would look at these images and label each of them some variety of “random shapes and colours”. The machine, however, would latch on to very slight likenesses, some of them imperceptible to humans, and arrive at creative-looking solutions.

Image from “Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images”

Even when the network was retrained with the knowledge that these generated images are nonsense, there were always other, equally bizarre images that it would confidently classify.

Ingenuous Ingenuity

These interesting solutions are incredibly evocative of the mindset of divergent thinkers. Divergent thinkers are individuals who, when solving a problem, are unrestricted by assumptions or reality.

Ken Robinson, in his talk Changing Education Paradigms, posed the hypothetical question: “How many uses can you think of for a paperclip?” Most people could maybe think of a few dozen uses, but a divergent thinker could arrive at hundreds. That’s because divergent thinkers don’t interpret the word “paperclip” with such a strict definition.

They don’t make what would seem like obvious assumptions to most people. What if the paperclip is fifty feet long and made of wood? The demographic which overwhelmingly represents divergent thinkers is kindergarten-age children.


You're Not Wrong

Dermatologists at Stanford University were training a neural net to determine whether a picture of a dermal lesion showed skin cancer. Initial tests went well enough until they realized that the machine would classify any image as potential skin cancer if there was a ruler in the photo.

Apparently, dermatologists tended to put rulers in photos “only for lesions that were a cause for concern”, which gave the neural net a cheap way out: spot the ruler rather than study the lesion.
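To make the ruler shortcut concrete, here is a minimal toy sketch in Python. It is not the Stanford model. The synthetic "photos", the feature names `irregular_border` and `has_ruler`, the 75% reliability of the genuine cue, and the one-feature learner `fit_stump` are all assumptions made up for illustration.

```python
import random

def make_photos(n, seed=0):
    """Synthetic 'photos' as tuples: (irregular_border, has_ruler, is_malignant)."""
    rng = random.Random(seed)
    photos = []
    for _ in range(n):
        malignant = rng.random() < 0.5
        # A genuine but noisy clinical cue (assumed right about 75% of the time).
        irregular = malignant if rng.random() < 0.75 else not malignant
        # The shortcut (simplifying assumption): a ruler appears exactly when
        # the lesion is worrying.
        ruler = malignant
        photos.append((irregular, ruler, malignant))
    return photos

# Hypothetical feature names mapped to their position in each tuple.
FEATURES = {"irregular_border": 0, "has_ruler": 1}

def accuracy(photos, index):
    """Fraction of photos where the feature at `index` equals the label."""
    return sum(p[index] == p[2] for p in photos) / len(photos)

def fit_stump(photos):
    """Pick the single feature that best predicts the label on the training set."""
    return max(FEATURES, key=lambda name: accuracy(photos, FEATURES[name]))

train = make_photos(1000)
chosen = fit_stump(train)
print("feature the model relies on:", chosen)

# A harmless lesion that happens to be photographed next to a ruler.
benign_with_ruler = (False, True, False)
prediction = benign_with_ruler[FEATURES[chosen]]
print("flagged as malignant:", prediction)
```

Nothing in the training data tells the learner that the ruler is irrelevant, so the most predictive feature wins, and the benign lesion gets flagged simply because of what sits beside it.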

Another AI was successfully trained to play old-school side-scrolling video games with the goal to “increase the score”. Its success became far more eerie when it learned to play Tetris with the goal to “not lose”. It found the perfect way to do it: pausing the game indefinitely right before it was about to lose.

My experience is neither the first nor the last instance of childish behaviour exhibited by artificially intelligent machines. Knowing that this will hardly be my last brush with managing divergent thought, I will need to construct an overarching approach to streamline my workflow in future endeavours. I outline that approach in part two of this article.
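The Tetris trick is easy to reproduce in miniature. The sketch below is a toy, not the original agent. The one-number "board" (`height`), the action list, and the rule that every real move raises the stack by one row are all hypothetical simplifications standing in for a position that can no longer be won.

```python
MAX_HEIGHT = 5                                # the game is lost at this height
ACTIONS = ["left", "right", "drop", "pause"]  # hypothetical action set

def step(height, action):
    """Toy dynamics: any real move lands a piece and adds a row; pausing freezes the game."""
    return height if action == "pause" else height + 1

def lost(height):
    return height >= MAX_HEIGHT

def steps_survived(height, action, horizon=100):
    """Score an action by how many steps repeating it goes without a loss."""
    survived = 0
    for _ in range(horizon):
        height = step(height, action)
        if lost(height):
            break
        survived += 1
    return survived

def best_action(height):
    """Choose the action that best satisfies the stated goal: 'not lose'."""
    return max(ACTIONS, key=lambda a: steps_survived(height, a))

# One move away from losing, the agent's choice is predictable.
print(best_action(MAX_HEIGHT - 1))
```

The objective only says “not lose”, and pausing satisfies it perfectly. In this toy, pausing is the best choice from any height, because nothing in the goal rewards actually playing.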